Chapter 3 Directory Structure and Code Repositories

Contributors: Kunal Mishra, Jade Benjamin-Chung, Ben Arnold

The backbone of your project workflow is the file directory so it makes sense to spend time organizing the directory. Note that directory is the technical term for the system used to organize individual files. Most non-UNIX environments use a folder analogy, and directory and folder can be used interchangeably in a lot of cases. A well organized directory will make everything that follows much easier. Just like a well designed kitchen is essential to enjoy cooking (and avoid clutter), a well designed directory helps you enjoy working and stay organized in a complex project with literally thousands of related files. Just like a disorganized kitchen (“now where did I put that spatula?”) a disorganized project directory creates confusion, lost time, stress, and mistakes.

Another huge advantage of maintaining a regular/predictable directory structure within a project and across projects is that it makes it more intuitive. When a directory is intuitive, it is easier to work collaboratively across a larger team; everybody can predict (at least approximately) where files should be.

Nested within your directory will be a code repository. Sometimes we find it useful to manage the code repository using version control, such as git/GitHub.

Other chapters will discuss coding practices, data management, and GitHub/version control that will build from the material here.

Carrying the kitchen analogy further: here, we are designing the kitchen. Then, we’ll discuss approaches for how to cook in the kitchen that we designed/built.

3.1 Small and large projects

Our experience is that the overwhelming majority of projects come in two sizes: small and large. We recommend setting up your directory structure depending on how large you expect the project to be. Sometimes, small projects evolve into large projects, but only occasionally. A small project is something like a single data analysis with a single published article in mind. A large project is an epidemiologic field study, where there are multiple different types of data and different types of analyses (e.g., sample size calculations, survey data, biospecimens, substudies, etc.).

Small project: There is essentially one dataset and a single, coherent analysis. For example, a simulation study or a methodology study that will lead to a single article.

Large project: A field study that includes multiple activities, each of which generates data files. Multiple analyses are envisioned, leading to multiple scientific articles.

Large projects are more common and more complicated. Most of this chapter focuses on large project organization (small projects can be thought of as essentially one piece of a large project).

3.2 Directory Structure

In the example below, we follow a basic directory naming convention that makes working in UNIX and typing directory statements in programs much easier:

- short names

- no spaces in the names (not essential but a personal preference. Can use

_or-instead) - lower case (not essential, again, personal preferences vary!)

For example, Ben completed a study in Tamil Nadu, India during his dissertation to study the effect of improvements in water supply and sanitation on child health. Instead of naming the directory Tamil Nadu or Tamil Nadu WASH Study, he used trichy instead (a colloquial name for the city near the study, Tiruchirappalli), which was much easier to type in the terminal and in directory statements. A short name helps make directory references easier while programming.

3.2.1 First level: data and analyses

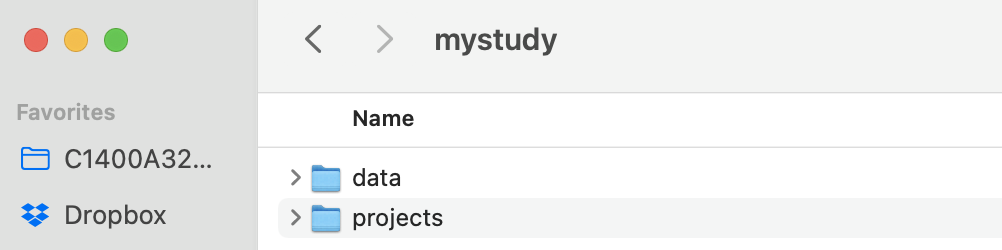

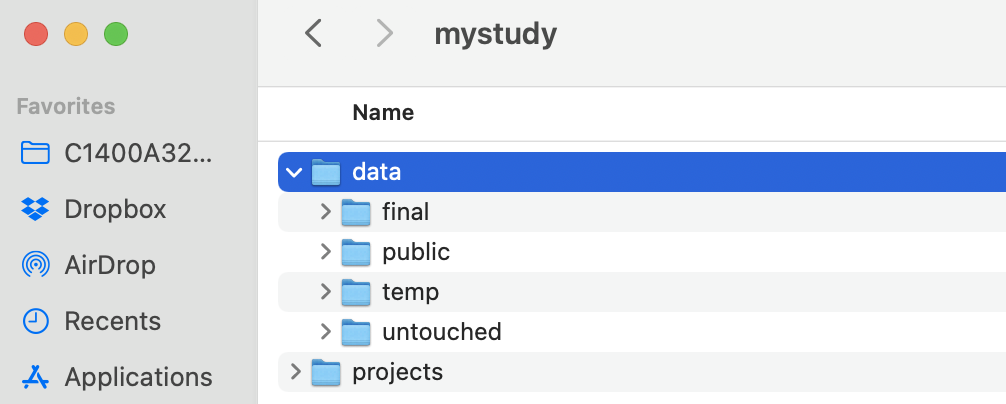

Figure 3.1: Example directory for mystudy

Start by dividing a project into major activities. In the example abpve, the project is named mystudy. There is a data subdirectory (more in a sec), and then three major activities, each corresponding to a separate analysis: primary-analysis,secondary-analysis-1, and secondary-analysis-2. In a real project, the names could be more informative, such as “trachoma-qpcr”. Also, a real project might also include many additional subdirectories related to administrative and logistics activities that do not relate to data science, such as irb, travel, contracts, budget, survey forms, etc.).

Dividing files into major activities helps keep things organized for really big projects. In a multi-site study, consider including a directory for each site before splitting files into major activities. Ideally, analyses will not span major activity subdirectories in a project folder, but sometimes you can’t predict/avoid that from happening.

3.2.2 Second level: data

Each project will include a data directory. We recommend organizing it into 3 parts: untouched, temp, and final. Often, it is useful to include a fourth subdirectory called public for sharing public versions of datasets.

The untouched directory includes all untouched datasets that are used for the study. Once saved in the directory never touch them; you will read them into the work flow, but never, ever save over them. If the study has repeated extracts from rolling data collection or electronic health records, consider subdirectories within untouched that are indexed by date.

The temp directory (optional, not essential) includes temporary files that you might generate during the data management process. This is mainly a space for experimentation. As a rule, never save anything in the temp directory that you cannot delete. Regularly delete files in the temp directory to save disk space.

The final directory includes final datasets for the activity. Final datasets are de-identified and require no further processing; they are clean and ready for analysis. They should be accompanied by meta-data, which at minimum includes the data’s provenance (i.e., how it was created) and what it includes (i.e., level of the data, plus variable coding/labels/descriptions). Clean/final datasets generated by one analysis might be reused in another.

NOTE: In very large projects, we occasionally need to further stratify the data directory into different subdirectories, according to data type. For example, in the AVENIR trial we had child mortality data measurements, and completely separate data from antimicrobial resistance (AMR) monitoring. These data were so myriad and different, that they needed to be curated in separate directories.

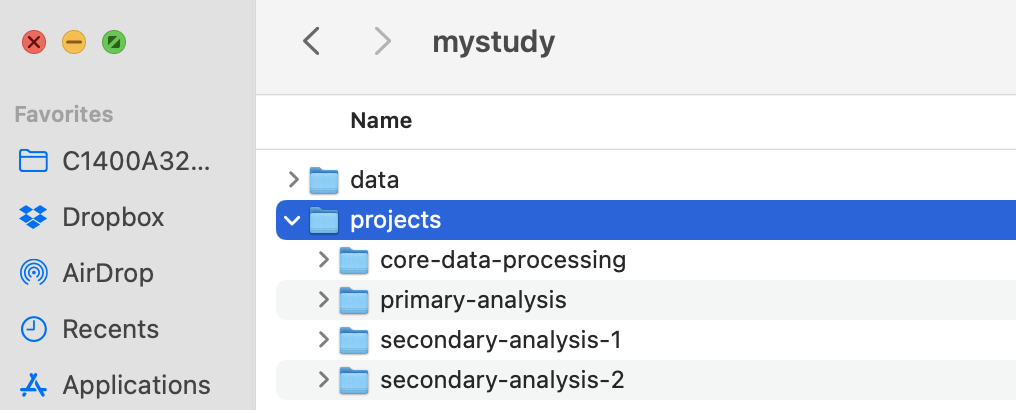

3.2.3 Second level: projects

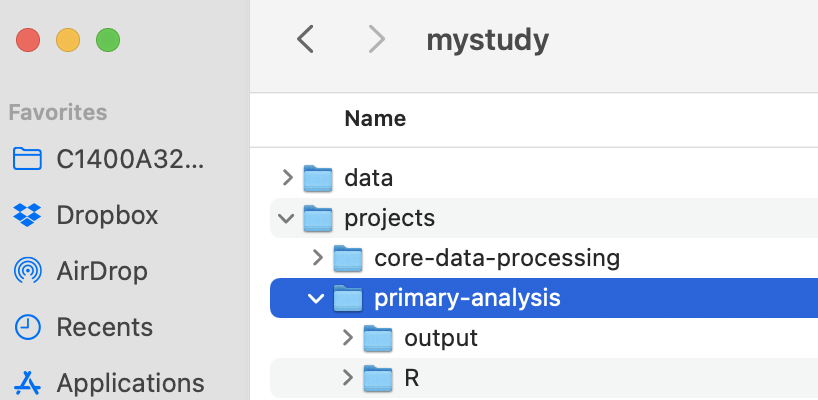

We recommend maintaining a separate project subdirectory to hold each major analysis in a project. In this example, there are four workflows with generic names from the view of trial: core-data-processing, primary-analysis, secondary-analysis-1, secondary-analysis-2.

IMPORTANT: We recommend consolidating the core data processing for the study in its own project. Core data processing is data processing that creates a shared asset across multiple, downstream analyses. It includes the scripts for the data processing workflow that take data from untouched to final in the previous section.

Think of each analysis as the scope of all of the work for a single, published paper. We recommend dividing the analysis project into a space for computational notebooks / scripts (i.e., a code repository), and a second for their output. The reason for the split is to make it easier to use version control (should you choose) for the code. Version control like git and GitHub (see the Chapter on GitHub) works well for text files but isn’t really designed for binary files such as images (.png), datasets (.rds), or PDF files (.pdf). It is certainly possible to use git with those file types, but since git makes a new copy of the file every time it is changed the git repo can get horribly bloated and takes up too much space on disk. Consolidating the output into a separate directory makes it more obvious that it isn’t under version control. In this example, there are separate parts for code (R) and output (output). Output could include figures, tables, or saved analysis results stored as data files (.rds or .csv). Another conventional name for the code repository is src as an alternative to R if you use other languages.

If a project creates unique datasets or relies on new data that is unique to the project and not a shared asset for the study (e.g., publicly available data), then the project itself might include a data subdirectory. HOWEVER, it should not include a direct copy of shared datasets stored in the mystudy/data repository – be sure to keep only one copy of each shared dataset in the project.

Interdependence between analyses: Sometimes a result from an analysis might be a cleaned dataset that could feed into future, distinct analyses. This is quite common, for example, in large trials where a set of baseline characteristics might be used in multiple separate papers for different endpoints, either for assessing balance of the trial population or subgroups, or used as adjustment covariates in additional analyses of the trial. In this case, the analysis-specific data processing should be moved upstream into the projects/core-data-processing workflow, and should generate a shared, final dataset in the data/final directory.

3.3 Code Repositories

Maintain a separate code repository for each major analysis activity (last section).

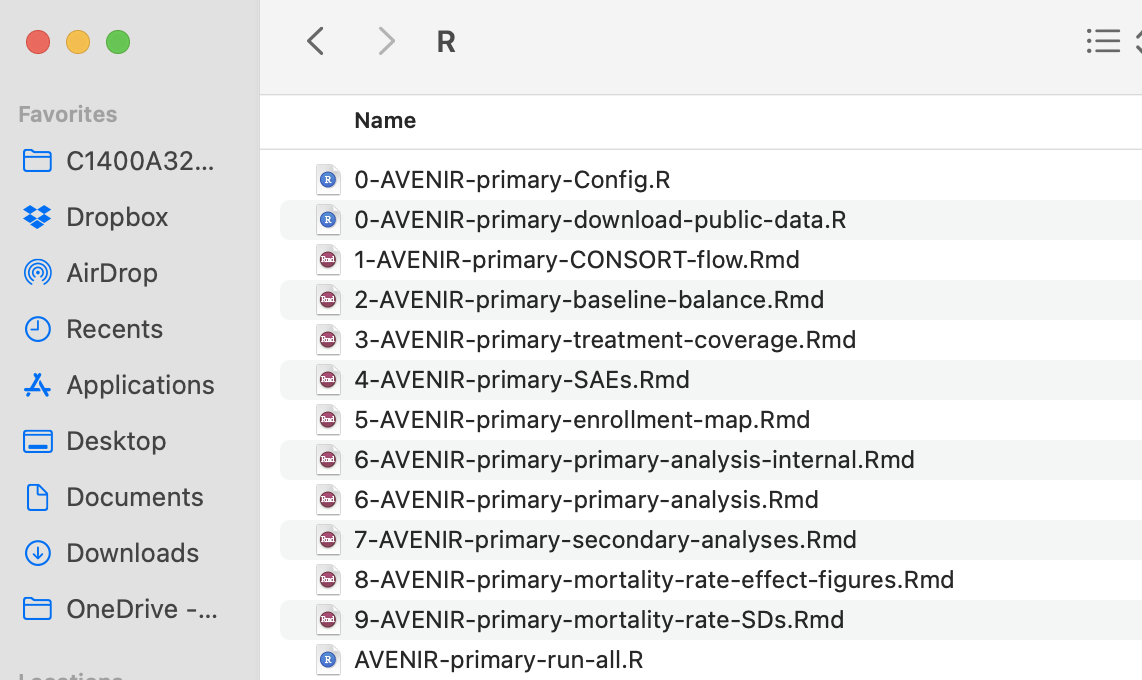

We recommend the following structure for a code repository. This is the actual repository for the AVENIR trial primary analysis.

Note that in this example, there is no actual data processing in the project. All of the data processing was consolidated upstream in a separate core-data-processing project for the trial. Sometimes, an analysis/project will include limited project-specific data processing that is not shared across the trial. If project-specific data processing is required, it would be one of the first scripts in the workflow. This helps ensure work conducted in step 1 of your workflow stays upstream from all analyses (see Chapter on workflows).

In this example, there is a script that downloads public datasets into a local repository instead. Also note that in this example, all of the scripts are .Rmd files. R scripts .R are equally useful.

You can glean some important takeaways from what you do see.

3.3.1 .Rproj files

An “R Project” can be created within RStudio by going to File >> New Project. Depending on where you are with your research, choose the most appropriate option. This will save preferences, working directories, and even the results of running code/data (though we recommend starting from scratch each time you open your project, in general). Then, ensure that whenever you are working on that specific research project, you open your created project to enable the full utility of .Rproj files. This also automatically sets the directory to the top level of the project.

3.3.2 Configuration (‘config’) File

This is the single most important file for your project. It will be responsible for a variety of common tasks, declare global variables, load functions, declare paths, and more. Every other file in the project will begin with source("0-config"), and its role is to reduce redundancy and create an abstraction layer that allows you to make changes in one place (0-config.R) rather than 5 different files. To this end, paths that will be referenced in multiple scripts (e.g., a clean_data_path) can be declared in 0-config.R and simply referred to by its variable name in scripts. If you ever want to change things, rename them, or even switch from a downsample to the full data, all you would then to need to do is modify the path in one place and the change will automatically update throughout your project. See the example config file for more details. The paths defined in the 0-config.R file assume that users have opened the .Rproj file, which sets the directory to the top level of the project.

This GitHub repository that has replication files for this study includes an example of a streamlined config.R file, with all packages loaded and directory references defined.

3.3.4 Order Files and Subdirectories

This makes the jumble of alphabetized filenames much more coherent and places similar code and files next to one another. Although sometimes there is not a linear progression from 1 to 2 to 3, in general the structure helps reflect how data flows from start to finish.

If you take nothing else away from this guide, this is the single most helpful suggestion to make your workflow more coherent. Often the particular order of files will be in flux until an analysis is close to completion. At that time it is important to review file order and naming and reproduce everything prior to drafting a manuscript.

3.3.5 Use Bash scripts or R scripts to ensure reproducibility

Bash scripts are useful components of a reproducible workflow. If your workflow is in R scripts, bash (.sh) can be useful to run the entire workflow in order. See the UNIX Chapter for further details. If your workflow is mainly R markdown or a mix of .R and .Rmd files, sometimes it is easier to simply make a single R script to run the workflow. In the AVENIR example (above), that is what we used AVENIR-primary-run-all.R.

3.3.6 Alternative approach for code repos

Another approach for organizing your code repository is to name all of your scripts according to the final figure or table that they generate for a particular article. In our experience, this only works for small projects, with a single set of coherent analyses. Here, you might have an alternative structure such as:

.gitignore

primary-analysis.Rproj

0-config.R

0-shared-functions.R

0-primary-analysis-run-all.sh

1-dm /

0-dm-run-all.sh

1-format-enrollment-data.R

2-format-adherence-data.R

3-format-LAZ-measurements.R

Fig1-consort.Rmd

Fig2-adherence.Rmd

Fig3-1-laz-analysis.Rmd

Fig3-2-laz-make-figure.RmdThere is still a need for a separate data management directory (e.g., dm) to ensure that workflow is upstream from the analysis (more below in chapter on UNIX), but then scripts are all together with clear labels. If a figure requires two stages to the analysis, then you can name them sequentially, such as Fig3-1-laz-analysis.Rmd, Fig3-2-laz-make-figure.Rmd. There is no way to divine how all of the analyses will neatly fit into files that correspond to separate figures. Instead, they will converge on these file names through the writing process, often through comsolidation or recombination.

One example of a small repo is here: https://github.com/ben-arnold/enterics-seroepi